COBYLA

Constrained optimization by linear approximation (COBYLA) is a numerical optimization method, invented by Michael J. D. Powell, for constrained problems where the derivative of the objective function is not known. That is, COBYLA can find the vector x in the feasible set that has the minimal (or maximal) objective value f(x) without knowing the gradient of f. COBYLA is also the name of Powell's software implementation of the algorithm in Fortran.[1]
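One common way to write the kind of problem COBYLA addresses is sketched below. The notation is an assumption introduced here for illustration: $f$ is the objective function, $c_1, \dots, c_m$ are the constraint functions, and $x$ is the vector of $n$ variables.

$$
\min_{x \in \mathbb{R}^n} f(x) \quad \text{subject to} \quad c_i(x) \ge 0, \quad i = 1, \dots, m.
$$

Only values of $f$ and $c_i$ at chosen points are required, so no gradients appear in the formulation. A maximization problem fits the same form by minimizing $-f$.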

Powell invented COBYLA while working for Westland Helicopters.[2]

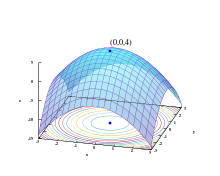

COBYLA works by iteratively approximating the actual constrained optimization problem with linear programming problems. In each iteration, an approximating linear programming problem is solved to obtain a candidate for the optimal solution. The candidate is then evaluated using the original objective and constraint functions, yielding a new data point in the optimization space. This information is used to improve the approximating linear programming problem for the next iteration of the algorithm. When the solution cannot be improved any further, the step size is reduced, refining the search. When the step size becomes sufficiently small, the algorithm finishes.
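As a hedged illustration of how the method is typically called in practice, the sketch below uses SciPy's `scipy.optimize.minimize` with `method="COBYLA"`. This is SciPy's interface and not Powell's original Fortran code. The objective, the constraints and the starting point are toy choices made up for this example. In SciPy, `rhobeg` sets the initial step size and `tol` acts as a lower bound on the final step size, which loosely mirrors the step-size reduction described above.

```python
import numpy as np
from scipy.optimize import minimize


def objective(x):
    # Toy objective (an assumption for this example): squared distance
    # to the point (2, 1). No gradient is supplied anywhere.
    return (x[0] - 2.0) ** 2 + (x[1] - 1.0) ** 2


# SciPy expects COBYLA inequality constraints in the form fun(x) >= 0.
constraints = [
    {"type": "ineq", "fun": lambda x: 2.0 - x[0] - x[1]},  # x + y <= 2
    {"type": "ineq", "fun": lambda x: x[0]},               # x >= 0
    {"type": "ineq", "fun": lambda x: x[1]},               # y >= 0
]

result = minimize(
    objective,
    x0=np.array([0.0, 0.0]),      # starting point, chosen arbitrarily
    method="COBYLA",
    constraints=constraints,
    tol=1e-6,                      # lower bound on the final step size
    options={"rhobeg": 0.5, "maxiter": 1000},
)

print(result.x, result.fun)
```

For this toy problem the constrained minimizer can be worked out by hand as the projection of (2, 1) onto the line x + y = 2, which is (1.5, 0.5), so the printed point should land close to that.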

The COBYLA software is distributed under the GNU Lesser General Public License (LGPL).[3]

References

- Andrew R. Conn; Katya Scheinberg; Ph. L. Toint (1997). "On the convergence of derivative-free methods for unconstrained optimization". Approximation theory and optimization: tributes to MJD Powell. pp. 83–108.

- M. J. D. Powell (2007). A view of algorithms for optimization without derivatives. Cambridge University Technical Report DAMTP 2007.

- "Source code of COBYLA software". Retrieved 2015-04-30.

External links

- Optimization software by Professor M. J. D. Powell at CCPForge

- A repository of Professor M. J. D. Powell's software