Time delay neural network

A time delay neural network (TDNN)[1] is an artificial neural network architecture designed to work on sequential data. TDNN units recognise features independently of time shift (i.e. position in the sequence) and usually form part of a larger pattern recognition system. An example is converting continuous audio into a stream of classified phoneme labels for speech recognition.

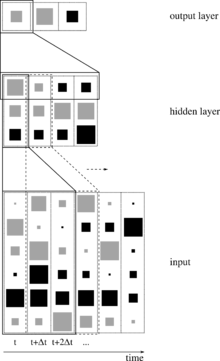

An input signal is augmented with delayed copies of itself as additional inputs. The neural network is time-shift invariant since it has no internal state.

The original paper presented a perceptron network whose connection weights were trained with the back-propagation algorithm; training may be done in batch or online mode. The Stuttgart Neural Network Simulator[2] implements that version.

Overview

The time delay neural network, like other neural networks, operates with multiple interconnected layers composed of clusters. These clusters are meant to represent neurons in a brain and, as in the brain, each cluster need only focus on a small region of the input. A prototypical TDNN has three layers of clusters: one for input, one for output, and a middle layer that manipulates the input through filters. Because they hold no internal state and process each window of the sequence in a single pass, TDNNs are implemented as feedforward neural networks rather than recurrent neural networks.
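The layered, feedforward structure described above can be sketched in plain Python. This is an illustration only, not the original paper's implementation: the function name `tdnn_layer`, the weight layout `weights[u][d][f]`, the sigmoid activation and all numeric values are assumptions made for the example.

```python
import math

def tdnn_layer(frames, weights, biases):
    """Apply one time-delay layer (hypothetical helper).

    frames:  list of T feature vectors, each a list of F floats
    weights: weights[u][d][f] for unit u, delay d, input feature f
    biases:  one bias per unit
    Returns T - D output vectors, where D is the number of delays.
    The same weights are reused at every time step.
    """
    num_delays = len(weights[0]) - 1
    outputs = []
    for t in range(num_delays, len(frames)):
        out = []
        for unit_w, b in zip(weights, biases):
            total = b
            for d, w_d in enumerate(unit_w):
                # Delay d looks at the frame d steps in the past.
                total += sum(w * x for w, x in zip(w_d, frames[t - d]))
            out.append(1.0 / (1.0 + math.exp(-total)))  # sigmoid (assumed)
        outputs.append(out)
    return outputs

# Six input frames with two features each (made-up values).
frames = [[0.1, 0.2], [0.3, 0.1], [0.0, 0.5], [0.4, 0.4], [0.2, 0.0], [0.6, 0.1]]

# Hidden layer: 3 units, each seeing the current frame plus 2 delayed frames.
hidden_w = [[[0.5, -0.3], [0.1, 0.2], [-0.4, 0.3]] for _ in range(3)]
hidden = tdnn_layer(frames, hidden_w, [0.0, 0.1, -0.1])

# Output layer: 1 unit, seeing the current hidden vector plus 1 delayed one.
output_w = [[[1.0, -1.0, 0.5], [0.2, 0.2, 0.2]]]
output = tdnn_layer(hidden, output_w, [0.0])
```

Each layer shortens the sequence by its number of delays, so six input frames yield four hidden vectors and three output vectors. No state is carried from one time step to the next; every output depends only on a fixed window of inputs.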

To achieve time-shift invariance, a set of delays is added to the input (audio file, image, etc.) so that the data is represented at different points in time. These delays are arbitrary and application-specific, which generally means the input data is customized for a specific delay pattern. Work has also been done on an adaptable time-delay TDNN,[3] which removes this manual tuning. The delays are an attempt to add a temporal dimension to the network that is not present in recurrent neural networks or in multi-layer perceptrons with a sliding window. The combination of past inputs with present inputs makes the TDNN's approach unique.

A key feature of TDNNs is the ability to express a relation between inputs in time. This relation can be the result of a feature detector and is used within the TDNN to recognize patterns between the delayed inputs.
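As a minimal sketch of how delays represent the data at different points in time, the following Python function builds one input row per time step from a signal and its delayed copies. The name `delay_embed` and the fixed, evenly spaced delay pattern are assumptions for illustration; real applications choose their own delay pattern.

```python
def delay_embed(signal, num_delays):
    """Return one row per time step t: [x[t], x[t-1], ..., x[t-num_delays]].

    Only time steps with a full history are kept, so the result has
    len(signal) - num_delays rows.
    """
    rows = []
    for t in range(num_delays, len(signal)):
        rows.append([signal[t - d] for d in range(num_delays + 1)])
    return rows

frames = delay_embed([1, 2, 3, 4, 5], num_delays=2)
# frames == [[3, 2, 1], [4, 3, 2], [5, 4, 3]]
```

Each row pairs the present sample with its two predecessors, which is the form in which a layer of the network sees the sequence.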

One of the main advantages of neural networks is that they do not depend on prior knowledge to set up the banks of filters at each layer. However, this means the network must learn the optimal values for these filters by processing numerous training inputs. Supervised learning is generally the learning approach associated with TDNNs because of its strength in pattern recognition and function approximation. It is commonly implemented with the back-propagation algorithm.
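To illustrate how filter values can be learned from labelled examples, the sketch below trains a single linear time-delay unit by batch gradient descent on a mean squared error. It is a deliberately reduced stand-in for back-propagation through a full network; the function name `train_tdnn_unit`, the learning rate, the number of epochs and the synthetic data are all assumptions for the example.

```python
import random

def train_tdnn_unit(signal, targets, num_delays, lr=0.5, epochs=300):
    """Fit weights w so that sum_d w[d] * x[t-d] approximates targets[t].

    The same weights are used at every time step, so the gradient is
    averaged over all time positions before each update.
    """
    weights = [0.0] * (num_delays + 1)
    n = len(signal) - num_delays
    for _ in range(epochs):
        grads = [0.0] * (num_delays + 1)
        for t in range(num_delays, len(signal)):
            window = [signal[t - d] for d in range(num_delays + 1)]
            pred = sum(w * x for w, x in zip(weights, window))
            err = pred - targets[t]
            for d, x in enumerate(window):
                grads[d] += err * x / n
        weights = [w - lr * g for w, g in zip(weights, grads)]
    return weights

# Synthetic task: the target is a fixed filter of the current and previous sample.
random.seed(0)
signal = [random.uniform(-1.0, 1.0) for _ in range(200)]
targets = [0.0] + [0.5 * signal[t] - 0.25 * signal[t - 1]
                   for t in range(1, len(signal))]  # targets[0] is unused

learned = train_tdnn_unit(signal, targets, num_delays=1)
# learned should end up close to [0.5, -0.25]
```

No filter values are supplied in advance; the unit recovers them purely from input and target pairs.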

Applications

Speech Recognition

TDNNs for speech recognition were introduced in 1989[1] and initially focused on phoneme detection. Speech lends itself well to TDNNs because spoken sounds are rarely of uniform length. By examining a sound shifted into the past and future, the TDNN is able to construct a model of that sound that is time-invariant. This is especially helpful in speech recognition because different dialects and languages pronounce the same sounds with different durations. Spectral coefficients are used to describe the relation between the input samples.
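The time invariance described above can be demonstrated with a toy example in Python. One shared-weight feature detector is slid along the signal and its responses are pooled over time. The kernel values, the test signals and the use of a maximum for pooling are assumptions chosen for simplicity, not a description of the 1989 network.

```python
def detector_response(signal, kernel):
    """Slide one shared-weight feature detector over the signal."""
    k = len(kernel)
    return [sum(w * signal[t + i] for i, w in enumerate(kernel))
            for t in range(len(signal) - k + 1)]

def pooled_score(signal, kernel):
    """Pool the detector's responses over time (max pooling, assumed)."""
    return max(detector_response(signal, kernel))

pattern = [1.0, 2.0, 1.0]
kernel = [1.0, 2.0, 1.0]

# The same pattern placed early and late in otherwise silent signals.
early = [0.0] * 2 + pattern + [0.0] * 7
late = [0.0] * 6 + pattern + [0.0] * 3

early_score = pooled_score(early, kernel)
late_score = pooled_score(late, kernel)
# Both scores are 6.0: the pattern is detected wherever it occurs.
```

The individual responses peak at different positions (index 2 for `early`, index 6 for `late`), but the pooled score is identical, which is what makes the detection independent of when the sound occurs.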

Video Analysis

Video has a temporal dimension, which makes a TDNN well suited to analyzing motion patterns. An example of this analysis is a combination of vehicle detection and pedestrian recognition.[4] When examining videos, successive images are fed into the TDNN as input, where each image is the next frame in the video. The strength of the TDNN comes from its ability to examine objects shifted forward and backward in time, so that an object remains detectable as its timing changes. If an object can be recognized in this manner, an application can anticipate where that object will be found in the future and act accordingly.

Common Libraries

- Matlab: The neural network toolbox provides functionality to produce a time delay neural network given the step size of the time delays and an optional training function. The default training algorithm is a supervised back-propagation algorithm that updates filter weights using Levenberg-Marquardt optimization. The function is timedelaynet(delays, hidden_layers, train_fnc); it returns a time-delay neural network architecture that a user can train and provide inputs to.[5]

- Torch: The Torch library can create complex machines like TDNNs by combining several built-in multi-layer perceptron (MLP) modules.[6]

- Caffe: No support for TDNNs at this time.

See also

- Convolutional neural network - a convolutional neural network in which the convolution is performed along the time axis of the data is very similar to a TDNN.

- Recurrent neural networks - a recurrent neural network also handles temporal data, albeit in a different manner. Instead of a time-varied input, RNNs maintain internal hidden layers to keep track of past (and, in the case of bi-directional RNNs, future) inputs.

References

- Alexander Waibel et al. "Phoneme Recognition Using Time-Delay Neural Networks." IEEE Transactions on Acoustics, Speech and Signal Processing, Volume 37, No. 3, pp. 328-339, March 1989.

- TDNN Fundamentals, chapter from the SNNS online manual.

- Wöhler, Christian, and Joachim K. Anlauf. "An adaptable time-delay neural-network algorithm for image sequence analysis." IEEE Transactions on Neural Networks 10.6 (1999): 1531-1536.

- Wöhler, Christian, and Joachim K. Anlauf. "Real-time object recognition on image sequences with the adaptable time delay neural network algorithm: applications for autonomous vehicles." Image and Vision Computing 19.9 (2001): 593-618.

- "Time Series and Dynamic Systems - MATLAB & Simulink". mathworks.com. Retrieved 21 June 2016.

- Collobert, Ronan, Samy Bengio, and Johnny Mariéthoz. Torch: a modular machine learning software library. No. EPFL-REPORT-82802. IDIAP, 2002.